Special Guest speaker Arsenii Alenichev

Dr Arsenii Alenichev is a Marie Skłodowska-Curie postdoctoral fellow at the Institute of Tropical Medicine in Antwerp, with a research focus on artificial intelligence and global health imagery. He earned his PhD in Anthropology from the University of Amsterdam and has published widely on the epistemic and political implications of image-making, contributing to debates on equity and ethics in global health. In 2023, the World Health Organization (WHO) shared an AI-generated image of a malnourished African child with the caption ‘When you smoke, I starve’, raising urgent questions about the ethics of synthetic imagery in public health campaigns. As global health institutions face shrinking budgets and rising pressures to adopt AI as a cost-saving solution, many are turning to generative tools like Midjourney to produce visuals.

The problematic history of images by NGOs

To make sense of what is going on right now, Arsenii shares a brief outline of the history of global health visual communication. It began in the 19th century with colonial-era photography, when missionaries and early anthropologists encountered communities and documented them. From the 1980s, this developed into “parachute photography,” in which organisations from the Global North sent photographers to the South to document health challenges and interventions. As a result, they photographed with a Western, colonial gaze. In the meantime, local Southern photographers pushed for recognition. But only from 2020, when people couldn’t fly during COVID, did organisations start actively employing local photographers.

Today, local photographers have to compete not only with Northern photographers and each other, but also with AI. As an educated guess, Arsenii thinks that 85% of all global health pictures online were shot between 1980 and 2020, and 10% before 1980. Since AI sources its images from the internet, image generation also reflects a Western, colonial perspective.

Hundreds of thousands of unethical images produced by humanitarian organisations are now the basis for AI to learn about the Global South and its people.

Prompting AI images based on colonial photos

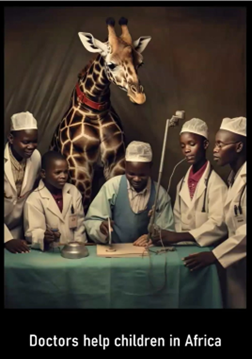

You see this when you try to generate a picture in Midjourney. You can easily generate pictures of Black doctors or of white children. But Black doctors helping white children are hard to generate. Arsenii and his team ran the prompt 350 times, and in every outcome, the children were depicted as Black. When “help” is changed to “food”, the AI changes the colours even more: the white children become Black, and the Black doctor becomes white.

Also, when you generate an image from a basic prompt, such as “Doctors help children in Africa”, wildlife appears in the picture alongside the doctors. This shows how Africa is perceived by the rest of the world.

Ethical, practical and moral challenges with AI visuals

Why do organisations want to use AI images? It is fast and cheap, reduces the carbon footprint (no travelling), protects anonymity, and doesn’t require consent. However, there are also many cons. The result is a fictionalised product; there is no journalism involved. It takes away local jobs and assignments, and is dehumanising for the people it represents. Many biases and stereotypes are reproduced in the new images, which then add to the existing body of pictures.

Since AI is new, there are no guidelines on how to use it in an ethical way. Therefore, organisations use different adoption strategies. And because hiring a photographer costs more than creating an image with AI, there is a significant financial incentive to use AI. Also, the boundaries between marketing and journalism are blurring, which is not helping the sector. Nowadays, you can also edit pictures very easily. You can change a shirt, make someone smile, or change people, backgrounds, or texts. This raises the question: how many edits are too many? When is using AI or Photoshop a little touch-up, and when is it creating something fictional?

How can you use AI in an ethical way?

You can use AI to create photorealistic images just as easily as more artistic ones. Arsenii is not happy with the use of photorealism, as it makes it hard for the audience to distinguish between truth and a made-up reality. He shows an example of a campaign that took real photos and turned them into artwork. In this way, you immediately see that the image is fabricated, but the setting still exists. Another way to use AI ethically is as a reference for photographers. With AI, you can easily create an image of what you want photographed and use it as a briefing for the photographer.

Speaking of briefings: with AI, it is becoming more common to share the prompt that was used. That makes it possible to recreate the image and also to see what was asked for. For example, some prompts request specific images of starving children or of mothers with sick infants. Such requests were also common in briefings for photographers, but those briefings are rarely shared.